How do we ascribe meaning to objects and actions in the open world to make safe, goal-aligned decisions?

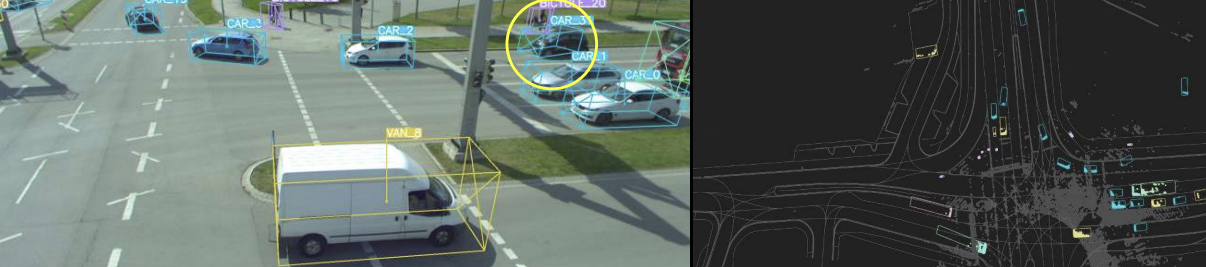

Humans and machines interpret many representations of the environment. Our eyes and ears, and robots' sensors, provide high-dimensional visual and audio representations of the world. At some point, these representations must be compressed so that we can make meaningful decisions. This compression can be expressed in natural language, or even through our actions and behaviors.

But beyond representation, humans must also use imagination to predict and plan. How can we enable machines to forecast, explore, and interact in open environments? Can we do so in a way that is robustly safe and highly adaptable to chaotic human impulses? These questions drive our research, expanding the capabilities of modern autonomous vehicles, robots, and human-interactive intelligent systems.

The Mi3 Lab at UC Merced researches machine intelligence, interaction, and imagination to build a future of increased safety and meaningful interactions between people and technology.

We address questions such as:

How can autonomous vehicles safely interact with first responders?

How can autonomous systems make mobility safe and accessible for all?

How can autonomous systems make sense of both verbal and non-verbal communication from people?

Research Areas:

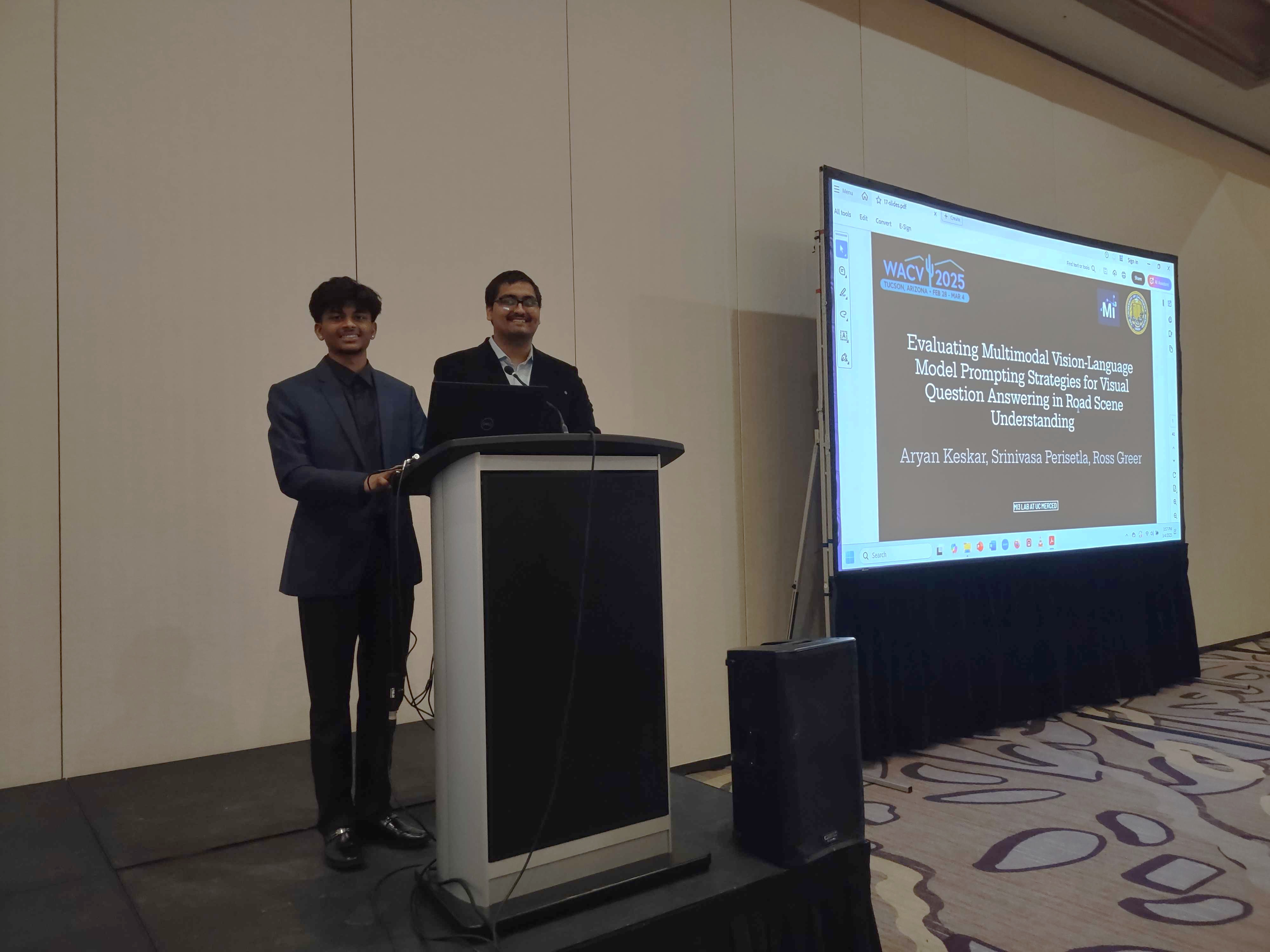

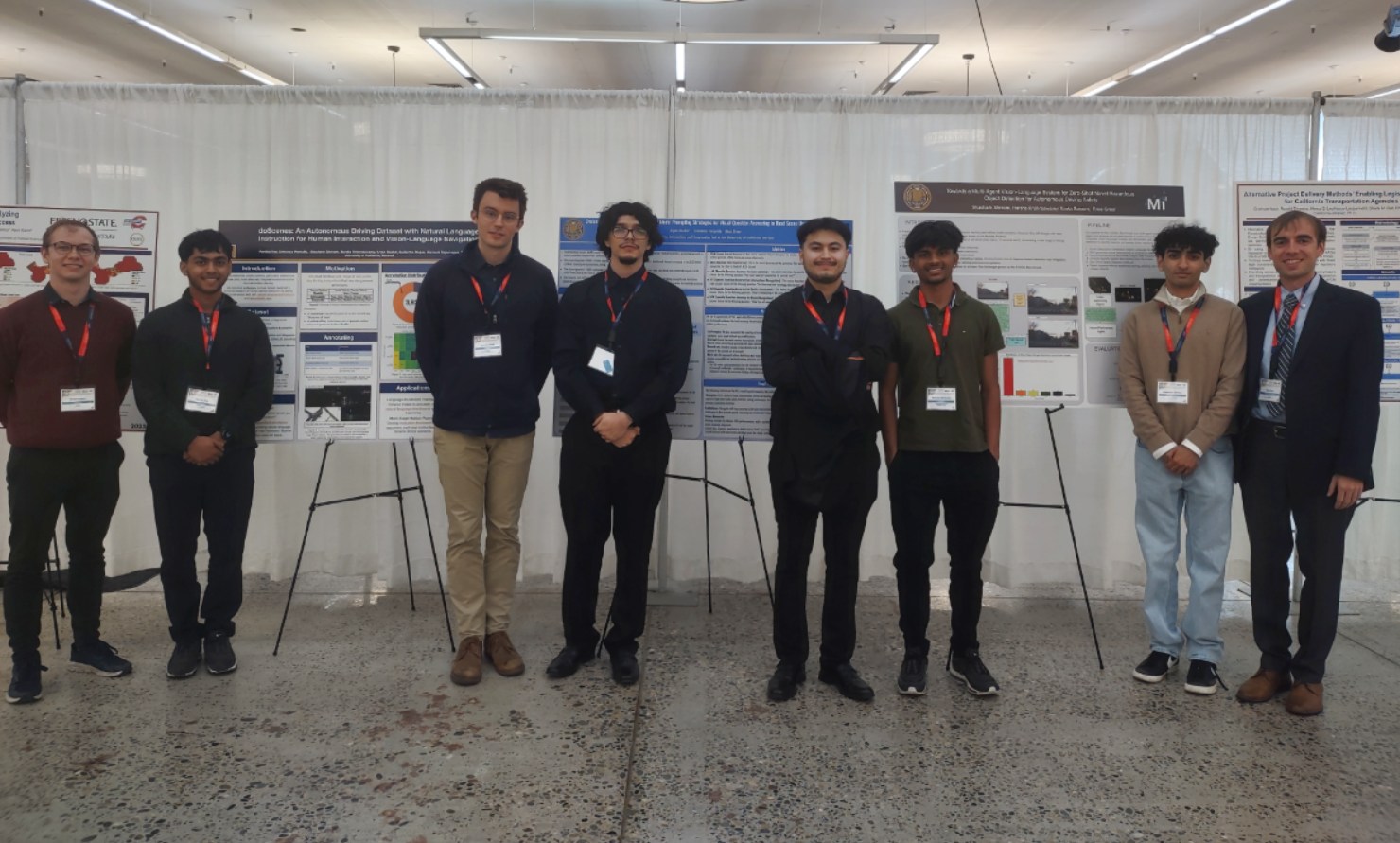

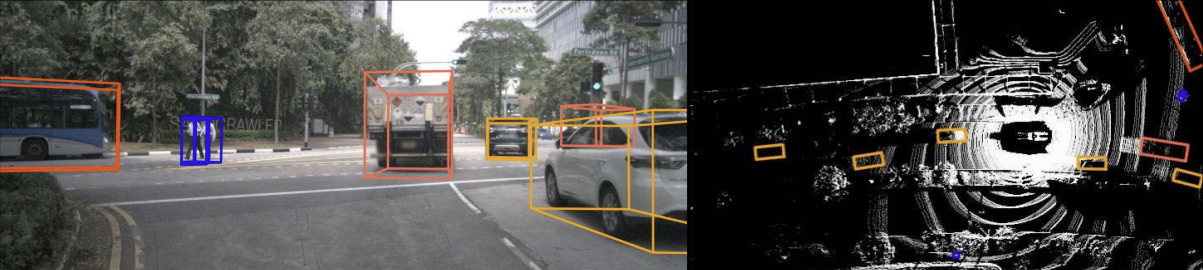

Artificial Intelligence | Computer Vision | Multimodal Machine Learning | Robotics | Human-Computer & Human-Robot Interaction

Salience & Attention | Vision-Language Representations | Explainability | Adaptability | Novelty | Validation and Safety

Application Domains:

- Safe Autonomous Driving & Intelligent Transportation Systems
- Robotic Perception and Planning
- Human-Machine Communication, Interaction, and Co-Creativity

Collaborators